A) Define security group(s) to allow all HTTP inbound/outbound traffic and assign those security group(s) to the Amazon SageMaker notebook instance.

B) Configure the Amazon SageMaker notebook instance to have access to the VPC. Grant permission in the KMS key policy to the notebook's KMS role.

C) Assign an IAM role to the Amazon SageMaker notebook with S3 read access to the dataset. Grant permission in the KMS key policy to that role.

D) Assign the same KMS key used to encrypt data in Amazon S3 to the Amazon SageMaker notebook instance.

F) None of the above

Correct Answer

verified

D

Multiple Choice

A Machine Learning Specialist needs to be able to ingest streaming data and store it in Apache Parquet files for exploration and analysis. Which of the following services would both ingest and store this data in the correct format?

A) AWS DMS

B) Amazon Kinesis Data Streams

C) Amazon Kinesis Data Firehose

D) Amazon Kinesis Data Analytics

F) A) and C)

Correct Answer

verified

Multiple Choice

A Machine Learning Specialist is designing a system for improving sales for a company. The objective is to use the large amount of information the company has on users' behavior and product preferences to predict which products users would like based on the users' similarity to other users. What should the Specialist do to meet this objective?

A) Build a content-based filtering recommendation engine with Apache Spark ML on Amazon EMR

B) Build a collaborative filtering recommendation engine with Apache Spark ML on Amazon EMR.

C) Build a model-based filtering recommendation engine with Apache Spark ML on Amazon EMR

D) Build a combinative filtering recommendation engine with Apache Spark ML on Amazon EMR

F) A) and B)

Correct Answer

verified
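
To make the phrase "users' similarity to other users" in the question above concrete, here is a minimal sketch of user-based collaborative filtering in plain Python. The ratings, user names, and function names are hypothetical and not part of the question; a production system on Amazon EMR would more likely use Spark ML, which is not shown here.

```python
from math import sqrt

# Hypothetical ratings (user -> {product: rating}); not taken from the question.
ratings = {
    "alice": {"p1": 5, "p2": 4, "p3": 1},
    "bob": {"p1": 5, "p2": 5, "p4": 4},
    "carol": {"p3": 5, "p4": 1, "p5": 4},
}


def cosine_similarity(a, b):
    """Cosine similarity between two sparse rating dictionaries."""
    shared = set(a) & set(b)
    dot = sum(a[item] * b[item] for item in shared)
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def recommend(user, ratings):
    """Rank unseen products by the similarity-weighted ratings of other users."""
    scores = {}
    for other, other_ratings in ratings.items():
        if other == user:
            continue
        weight = cosine_similarity(ratings[user], other_ratings)
        for item, rating in other_ratings.items():
            if item not in ratings[user]:
                scores[item] = scores.get(item, 0.0) + weight * rating
    return sorted(scores, key=scores.get, reverse=True)


print(recommend("alice", ratings))
```

In this toy data, "alice" rates products much like "bob" does, so the product that "bob" liked and "alice" has not seen ranks first.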

Multiple Choice

A Machine Learning Specialist working for an online fashion company wants to build a data ingestion solution for the company's Amazon S3-based data lake. The Specialist wants to create a set of ingestion mechanisms that will enable the following future capabilities: real-time analytics, interactive analytics of historical data, clickstream analytics, and product recommendations. Which services should the Specialist use?

A) AWS Glue as the data catalog; Amazon Kinesis Data Streams and Amazon Kinesis Data Analytics for real-time data insights; Amazon Kinesis Data Firehose for delivery to Amazon ES for clickstream analytics; Amazon EMR to generate personalized product recommendations

B) Amazon Athena as the data catalog; Amazon Kinesis Data Streams and Amazon Kinesis Data Analytics for near-real-time data insights; Amazon Kinesis Data Firehose for clickstream analytics; AWS Glue to generate personalized product recommendations

C) AWS Glue as the data catalog; Amazon Kinesis Data Streams and Amazon Kinesis Data Analytics for historical data insights; Amazon Kinesis Data Firehose for delivery to Amazon ES for clickstream analytics; Amazon EMR to generate personalized product recommendations

D) Amazon Athena as the data catalog; Amazon Kinesis Data Streams and Amazon Kinesis Data Analytics for historical data insights; Amazon DynamoDB streams for clickstream analytics; AWS Glue to generate personalized product recommendations

F) C) and D)

Correct Answer

verified

Multiple Choice

A company will use Amazon SageMaker to train and host a machine learning (ML) model for a marketing campaign. The majority of data is sensitive customer data. The data must be encrypted at rest. The company wants AWS to maintain the root of trust for the master keys and wants encryption key usage to be logged. Which implementation will meet these requirements?

A) Use encryption keys that are stored in AWS CloudHSM to encrypt the ML data volumes, and to encrypt the model artifacts and data in Amazon S3.

B) Use SageMaker built-in transient keys to encrypt the ML data volumes. Enable default encryption for new Amazon Elastic Block Store (Amazon EBS) volumes.

C) Use customer managed keys in AWS Key Management Service (AWS KMS) to encrypt the ML data volumes, and to encrypt the model artifacts and data in Amazon S3.

D) Use AWS Security Token Service (AWS STS) to create temporary tokens to encrypt the ML storage volumes, and to encrypt the model artifacts and data in Amazon S3.

F) B) and C)

Correct Answer

verified

Multiple Choice

A city wants to monitor its air quality to address the consequences of air pollution. A Machine Learning Specialist needs to forecast the air quality in parts per million of contaminants for the next 2 days in the city. As this is a prototype, only daily data from the last year is available. Which model is MOST likely to provide the best results in Amazon SageMaker?

A) Use the Amazon SageMaker k-Nearest-Neighbors (kNN) algorithm on the single time series consisting of the full year of data with a predictor_type of regressor.

B) Use Amazon SageMaker Random Cut Forest (RCF) on the single time series consisting of the full year of data.

C) Use the Amazon SageMaker Linear Learner algorithm on the single time series consisting of the full year of data with a predictor_type of regressor.

D) Use the Amazon SageMaker Linear Learner algorithm on the single time series consisting of the full year of data with a predictor_type of classifier.

F) B) and C)

Correct Answer

verified

Multiple Choice

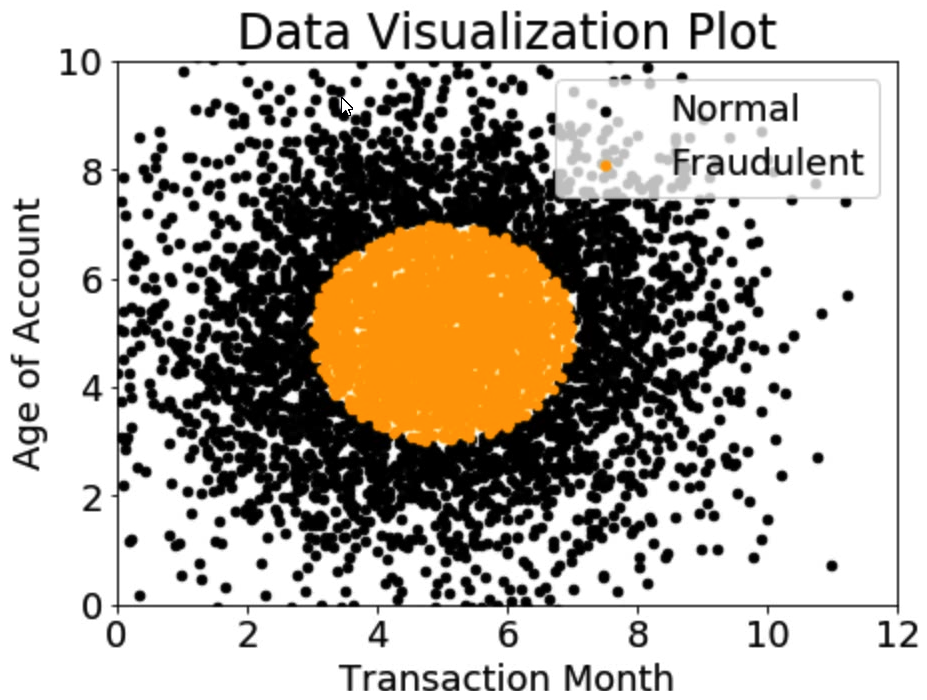

A company wants to classify user behavior as either fraudulent or normal. Based on internal research, a Machine Learning Specialist would like to build a binary classifier based on two features: age of account and transaction month. The class distribution for these features is illustrated in the figure provided.  Based on this information, which model would have the HIGHEST recall with respect to the fraudulent class?

A) Decision tree

B) Linear support vector machine (SVM)

C) Naive Bayesian classifier

D) Single Perceptron with sigmoidal activation function

F) All of the above

Correct Answer

verified
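
Since the question above turns on recall for the fraudulent class, a short sketch of how recall is computed may help. The labels below are invented for illustration, the function name is hypothetical, and the sketch does not depend on the figure or indicate which model the question expects.

```python
def recall(y_true, y_pred, positive=1):
    """Recall = true positives / (true positives + false negatives)."""
    pairs = list(zip(y_true, y_pred))
    true_pos = sum(1 for t, p in pairs if t == positive and p == positive)
    false_neg = sum(1 for t, p in pairs if t == positive and p != positive)
    total = true_pos + false_neg
    return true_pos / total if total else 0.0


# Hypothetical labels: 1 = fraudulent, 0 = normal.
y_true = [1, 1, 1, 0, 0]
y_pred = [1, 0, 1, 0, 1]
print(recall(y_true, y_pred))
```

Recall ignores false positives entirely, so a model can reach high recall on the fraudulent class while still flagging many normal accounts.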

Multiple Choice

A company has set up and deployed its machine learning (ML) model into production with an endpoint using Amazon SageMaker hosting services. The ML team has configured automatic scaling for its SageMaker instances to support workload changes. During testing, the team notices that additional instances are being launched before the new instances are ready. This behavior needs to change as soon as possible. How can the ML team solve this issue?

A) Decrease the cooldown period for the scale-in activity. Increase the configured maximum capacity of instances.

B) Replace the current endpoint with a multi-model endpoint using SageMaker.

C) Set up Amazon API Gateway and AWS Lambda to trigger the SageMaker inference endpoint.

D) Increase the cooldown period for the scale-out activity.

F) A) and D)

Correct Answer

verified
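
For readers unfamiliar with where cooldown periods are set, the sketch below shows a target-tracking policy configuration in the shape used by the Application Auto Scaling `PutScalingPolicy` API. The numeric values are illustrative assumptions, not recommendations, and the sketch only builds and prints the configuration; it does not call AWS.

```python
import json

# Target-tracking configuration for a SageMaker endpoint variant.
# All numeric values are hypothetical and chosen only for illustration.
policy_configuration = {
    "TargetValue": 70.0,  # assumed target for invocations per instance
    "PredefinedMetricSpecification": {
        "PredefinedMetricType": "SageMakerVariantInvocationsPerInstance",
    },
    # Seconds to wait after a scale-out activity before starting another one.
    "ScaleOutCooldown": 600,
    # Seconds to wait after a scale-in activity before starting another one.
    "ScaleInCooldown": 300,
}

print(json.dumps(policy_configuration, indent=2))
```

A longer `ScaleOutCooldown` gives newly launched instances time to come into service before the policy evaluates whether to add more.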

Multiple Choice

A company ingests machine learning (ML) data from web advertising clicks into an Amazon S3 data lake. Click data is added to an Amazon Kinesis data stream by using the Kinesis Producer Library (KPL). The data is loaded into the S3 data lake from the data stream by using an Amazon Kinesis Data Firehose delivery stream. As the data volume increases, an ML specialist notices that the rate of data ingested into Amazon S3 is relatively constant. There also is an increasing backlog of data for Kinesis Data Streams and Kinesis Data Firehose to ingest. Which next step is MOST likely to improve the data ingestion rate into Amazon S3?

A) Increase the number of S3 prefixes for the delivery stream to write to.

B) Decrease the retention period for the data stream.

C) Increase the number of shards for the data stream.

D) Add more consumers using the Kinesis Client Library (KCL).

F) A) and D)

Correct Answer

verified
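
The capacity reasoning behind this scenario can be sketched with simple arithmetic. The sketch assumes a provisioned-mode Kinesis data stream, where each shard accepts writes of up to 1 MB per second or 1,000 records per second; the traffic figures and the function name are hypothetical.

```python
from math import ceil

# Per-shard write limits for a provisioned Kinesis data stream.
SHARD_MB_PER_SEC = 1.0
SHARD_RECORDS_PER_SEC = 1000


def shards_needed(mb_per_sec, records_per_sec):
    """Smallest shard count that satisfies both per-shard write limits."""
    by_bytes = ceil(mb_per_sec / SHARD_MB_PER_SEC)
    by_records = ceil(records_per_sec / SHARD_RECORDS_PER_SEC)
    return max(by_bytes, by_records, 1)


# Hypothetical click traffic: 4.5 MB/s spread over 3,000 records/s.
print(shards_needed(4.5, 3000))
```

When incoming traffic exceeds what the current shards can accept, throughput into the stream stays flat and a backlog builds upstream, which matches the symptoms described in the question.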

Multiple Choice

A machine learning (ML) specialist must develop a classification model for a financial services company. A domain expert provides the dataset, which is tabular with 10,000 rows and 1,020 features. During exploratory data analysis, the specialist finds no missing values and a small percentage of duplicate rows. There are correlation scores of > 0.9 for 200 feature pairs. The mean value of each feature is similar to its 50th percentile. Which feature engineering strategy should the ML specialist use with Amazon SageMaker?

A) Apply dimensionality reduction by using the principal component analysis (PCA) algorithm.

B) Drop the features with low correlation scores by using a Jupyter notebook.

C) Apply anomaly detection by using the Random Cut Forest (RCF) algorithm.

D) Concatenate the features with high correlation scores by using a Jupyter notebook.

F) A) and C)

Correct Answer

verified

C

Multiple Choice

A Machine Learning Specialist is building a model that will perform time series forecasting using Amazon SageMaker. The Specialist has finished training the model and is now planning to perform load testing on the endpoint so they can configure Auto Scaling for the model variant. Which approach will allow the Specialist to review the latency, memory utilization, and CPU utilization during the load test?

A) Review SageMaker logs that have been written to Amazon S3 by leveraging Amazon Athena and Amazon QuickSight to visualize logs as they are being produced.

B) Generate an Amazon CloudWatch dashboard to create a single view for the latency, memory utilization, and CPU utilization metrics that are outputted by Amazon SageMaker.

C) Build custom Amazon CloudWatch Logs and then leverage Amazon ES and Kibana to query and visualize the log data as it is generated by Amazon SageMaker.

D) Send Amazon CloudWatch Logs that were generated by Amazon SageMaker to Amazon ES and use Kibana to query and visualize the log data

F) B) and C)

Correct Answer

verified

Multiple Choice

A technology startup is using complex deep neural networks and GPU compute to recommend the company's products to its existing customers based upon each customer's habits and interactions. The solution currently pulls each dataset from an Amazon S3 bucket before loading the data into a TensorFlow model pulled from the company's Git repository that runs locally. This job then runs for several hours while continually outputting its progress to the same S3 bucket. The job can be paused, restarted, and continued at any time in the event of a failure, and is run from a central queue. Senior managers are concerned about the complexity of the solution's resource management and the costs involved in repeating the process regularly. They ask for the workload to be automated so it runs once a week, starting Monday and completing by the close of business Friday. Which architecture should be used to scale the solution at the lowest cost?

A) Implement the solution using AWS Deep Learning Containers and run the container as a job using AWS Batch on a GPU-compatible Spot Instance

B) Implement the solution using a low-cost GPU-compatible Amazon EC2 instance and use the AWS Instance Scheduler to schedule the task

C) Implement the solution using AWS Deep Learning Containers, run the workload using AWS Fargate running on Spot Instances, and then schedule the task using the built-in task scheduler

D) Implement the solution using Amazon ECS running on Spot Instances and schedule the task using the ECS service scheduler

F) C) and D)

Correct Answer

verified

Multiple Choice

A Marketing Manager at a pet insurance company plans to launch a targeted marketing campaign on social media to acquire new customers. Currently, the company has the following data in Amazon Aurora: profiles for all past and existing customers, profiles for all past and existing insured pets, policy-level information, premiums received, and claims paid. What steps should be taken to implement a machine learning model to identify potential new customers on social media?

A) Use regression on customer profile data to understand key characteristics of consumer segments. Find similar profiles on social media

B) Use clustering on customer profile data to understand key characteristics of consumer segments. Find similar profiles on social media

C) Use a recommendation engine on customer profile data to understand key characteristics of consumer segments. Find similar profiles on social media.

D) Use a decision tree classifier engine on customer profile data to understand key characteristics of consumer segments. Find similar profiles on social media.

F) B) and C)

Correct Answer

verified

Multiple Choice

A Data Scientist is working on an application that performs sentiment analysis. The validation accuracy is poor, and the Data Scientist thinks that the cause may be a rich vocabulary and a low average frequency of words in the dataset. Which tool should be used to improve the validation accuracy?

A) Amazon Comprehend syntax analysis and entity detection

B) Amazon SageMaker BlazingText cbow mode

C) Natural Language Toolkit (NLTK) stemming and stop word removal

D) Scikit-learn term frequency-inverse document frequency (TF-IDF) vectorizer

F) B) and C)

Correct Answer

verified
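
One of the options above mentions TF-IDF. As background, here is a minimal plain-Python sketch of the basic weighting, tf × log(N / df). This is the textbook form, not scikit-learn's vectorizer, which applies smoothing and normalization by default; the sample documents and the function name are hypothetical.

```python
from collections import Counter
from math import log


def tf_idf(documents):
    """Return one {term: weight} dict per document using tf * log(N / df)."""
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: number of documents that contain each term.
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)
        weights.append({
            term: (count / len(tokens)) * log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights


docs = ["great great movie", "terrible movie"]
for row in tf_idf(docs):
    print(row)
```

Terms that appear in every document, such as "movie" here, receive a weight of zero, while terms concentrated in a few documents are weighted up.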

Multiple Choice

A company wants to predict the sale prices of houses based on available historical sales data. The target variable in the company's dataset is the sale price. The features include parameters such as the lot size, living area measurements, non-living area measurements, number of bedrooms, number of bathrooms, year built, and postal code. The company wants to use multi-variable linear regression to predict house sale prices. Which step should a machine learning specialist take to remove features that are irrelevant for the analysis and reduce the model's complexity?

A) Plot a histogram of the features and compute their standard deviation. Remove features with high variance.

B) Plot a histogram of the features and compute their standard deviation. Remove features with low variance.

C) Build a heatmap showing the correlation of the dataset against itself. Remove features with low mutual correlation scores.

D) Run a correlation check of all features against the target variable. Remove features with low target variable correlation scores.

F) B) and C)

Correct Answer

verified
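
Several options above rely on correlation scores. The sketch below shows, under stated assumptions, how a correlation check of each feature against the target could be run in plain Python. The feature values, the 0.3 threshold, and the function names are hypothetical, and Pearson correlation only captures linear relationships.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient; returns 0.0 if either input is constant."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y) if var_x and var_y else 0.0


def select_features(features, target, threshold=0.3):
    """Keep features whose absolute correlation with the target meets the threshold."""
    return [name for name, values in features.items()
            if abs(pearson(values, target)) >= threshold]


# Hypothetical data: sale price in thousands and two candidate features.
sale_price = [100, 150, 200, 250, 300]
features = {
    "living_area": [50, 75, 100, 125, 150],
    "noise": [3, 1, 3, 1, 3],
}
print(select_features(features, sale_price))
```

Here "living_area" tracks the sale price exactly and is kept, while "noise" has zero correlation with the target and is dropped.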

Multiple Choice

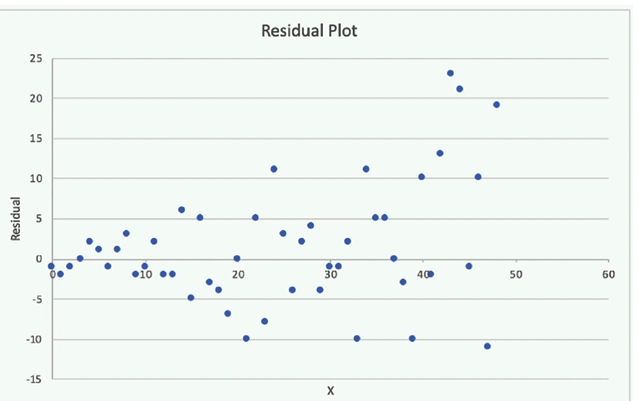

A Machine Learning Specialist is attempting to build a linear regression model.  Given the displayed residual plot only, what is the MOST likely problem with the model?

A) Linear regression is inappropriate. The residuals do not have constant variance.

B) Linear regression is inappropriate. The underlying data has outliers.

C) Linear regression is appropriate. The residuals have a zero mean.

D) Linear regression is appropriate. The residuals have constant variance.

F) B) and C)

Correct Answer

verified
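
The options above refer to whether residuals have constant variance. Without reference to the figure, a rough numerical check for that property can be sketched as follows: sort the residuals by fitted value and compare the variance of the upper half with that of the lower half. The data and function names are hypothetical, and the sketch assumes the lower half has nonzero variance.

```python
def variance(values):
    """Population variance of a list of numbers."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def residual_variance_ratio(fitted, residuals):
    """Variance of residuals at high fitted values divided by that at low fitted values."""
    ordered = [r for _, r in sorted(zip(fitted, residuals))]
    half = len(ordered) // 2
    return variance(ordered[half:]) / variance(ordered[:half])


# Hypothetical residuals that fan out as the fitted value grows.
fitted = [1, 2, 3, 4, 5, 6, 7, 8]
residuals = [0.1, -0.1, 0.2, -0.2, 1.0, -1.0, 2.0, -2.0]
print(residual_variance_ratio(fitted, residuals))
```

A ratio near 1 is consistent with constant variance, while a ratio far from 1 suggests the spread of the residuals changes with the fitted value.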

Multiple Choice

An office security agency conducted a successful pilot using 100 cameras installed at key locations within the main office. Images from the cameras were uploaded to Amazon S3 and tagged using Amazon Rekognition, and the results were stored in Amazon ES. The agency is now looking to expand the pilot into a full production system using thousands of video cameras in its office locations globally. The goal is to identify activities performed by non-employees in real time. Which solution should the agency consider?

A) Use a proxy server at each local office and for each camera, and stream the RTSP feed to a unique Amazon Kinesis Video Streams video stream. On each stream, use Amazon Rekognition Video and create a stream processor to detect faces from a collection of known employees, and alert when non-employees are detected.

B) Use a proxy server at each local office and for each camera, and stream the RTSP feed to a unique Amazon Kinesis Video Streams video stream. On each stream, use Amazon Rekognition Image to detect faces from a collection of known employees and alert when non-employees are detected.

C) Install AWS DeepLens cameras and use the DeepLens_Kinesis_Video module to stream video to Amazon Kinesis Video Streams for each camera. On each stream, use Amazon Rekognition Video and create a stream processor to detect faces from a collection on each stream, and alert when non-employees are detected.

D) Install AWS DeepLens cameras and use the DeepLens_Kinesis_Video module to stream video to Amazon Kinesis Video Streams for each camera. On each stream, run an AWS Lambda function to capture image fragments and then call Amazon Rekognition Image to detect faces from a collection of known employees, and alert when non-employees are detected.

F) A) and B)

Correct Answer

verified

Multiple Choice

When submitting Amazon SageMaker training jobs using one of the built-in algorithms, which common parameters MUST be specified? (Choose three.)

A) The training channel identifying the location of training data on an Amazon S3 bucket.

B) The validation channel identifying the location of validation data on an Amazon S3 bucket.

C) The IAM role that Amazon SageMaker can assume to perform tasks on behalf of the users.

D) Hyperparameters in a JSON array as documented for the algorithm used.

E) The Amazon EC2 instance class specifying whether training will be run using CPU or GPU.

F) The output path specifying where on an Amazon S3 bucket the trained model will persist.

H) B) and F)

Correct Answer

verified

A,E,F

Multiple Choice

An aircraft engine manufacturing company is measuring 200 performance metrics in a time-series. Engineers want to detect critical manufacturing defects in near-real time during testing. All of the data needs to be stored for offline analysis. What approach would be the MOST effective to perform near-real time defect detection?

A) Use AWS IoT Analytics for ingestion, storage, and further analysis. Use Jupyter notebooks from within AWS IoT Analytics to carry out analysis for anomalies.

B) Use Amazon S3 for ingestion, storage, and further analysis. Use an Amazon EMR cluster to carry out Apache Spark ML k-means clustering to determine anomalies.

C) Use Amazon S3 for ingestion, storage, and further analysis. Use the Amazon SageMaker Random Cut Forest (RCF) algorithm to determine anomalies.

D) Use Amazon Kinesis Data Firehose for ingestion and Amazon Kinesis Data Analytics Random Cut Forest (RCF) to perform anomaly detection. Use Kinesis Data Firehose to store data in Amazon S3 for further analysis.

F) A) and C)

Correct Answer

verified

Multiple Choice

Which of the following metrics should a Machine Learning Specialist generally use to compare/evaluate machine learning classification models against each other?

A) Recall

B) Misclassification rate

C) Mean absolute percentage error (MAPE)

D) Area Under the ROC Curve (AUC)

F) A) and C)

Correct Answer

verified
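
As background for the metrics listed above, the sketch below computes the area under the ROC curve directly from its pairwise interpretation: the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one, with ties counted as one half. The labels, scores, and function name are hypothetical.

```python
def auc(y_true, scores):
    """AUC as the fraction of positive/negative pairs ranked correctly."""
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))


# Hypothetical labels and model scores.
y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(auc(y_true, scores))
```

Because it is computed from the ranking of scores, AUC does not depend on any single classification threshold.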
